<< The partial synchronization states of collective activity, as well as the spike avalanches realization in systems of interacting neurons, are extremely important distinguishing features of the neocortical circuits that have multiple empirical validations. However, at this stage, there is a limited number of studies highlighting their potential interrelationship at the level of nonlinear mathematical models. >>

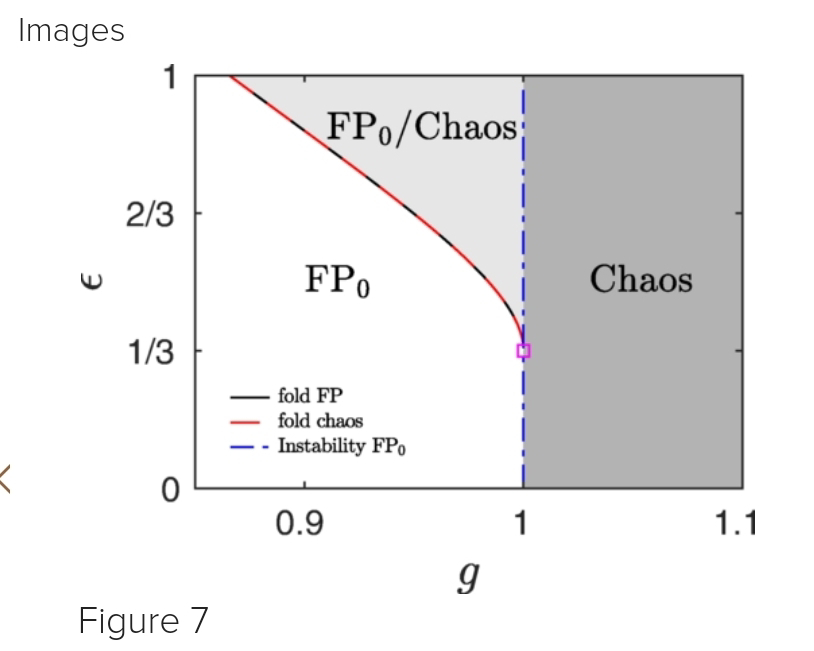

<< In this study, (AA) investigate the development of chimera states and the emergence of spike avalanches in superdiffusive neural networks, as well as analyze the system's approach to quasicriticality. >>

<< The analysis of the available ideas suggests that partial synchronization states, spike avalanches, and quasicritical neuronal dynamics are all directly implicated in core cognitive functions such as information processing, attention, and memory. Given this fundamental role, the results presented in this (AA) work could have significant implications for both theoretical neuroscience and applied machine learning, particularly in the development of reservoir computing systems. >>

I. Fateev, A. Polezhaev. From chimera states to spike avalanches and quasicriticality: The role of superdiffusive coupling. Phys. Rev. E 113, 014215. Jan 20, 2026.

Also: network, brain, neuro, behav, chimera, random, walk, walking, in https://www.inkgmr.net/kwrds.html

Keywords: gst, networks, neuronal network models, chimera, random, walk, walking, avalanches, neuronal avalanches, collective behaviors, criticality.